Understanding the customer’s experience is more complicated than it has ever been. There are so many different ways that customers can tell you what they think and feel: from App Store and Google Play reviews to Trustpilot, Feefo, all manner of social media and more. This ever-growing list of ways for customers to proactively express their views now complements traditional channels such as surveys and customer support queries.

Despite the way the narrative is sometimes presented, responding to surveys is still a thing; people still do it. It’s just that surveys now form one part of an ecosystem. Nowadays, understanding customers means having the intelligence architecture in place to listen to customers throughout their journey, regardless of where or how they choose to provide feedback.

A key part of the strategy team’s role here at Chattermill is to advise on how and where data should be collected. We work with organisations to ensure that both the coverage and the quality of the feedback that is gathered are fit for purpose.

Artificial intelligence is incredible, but it’s not magic. As with any process or machine, flawed inputs lead to flawed outputs. ‘Garbage in, garbage out’ is just as true as it has ever been.

Balancing the need to understand the experience with the need not to annoy customers can be tricky. There’s a surprising amount of nuance involved. Often, however, this nuance only becomes clear after mapping out how and where feedback is proactively requested or reactively collected across the journey.

A lot (but certainly not all) of the organisations we work with have a joined-up survey strategy in place. A joined-up ‘intelligence strategy’ is also increasingly the norm.

Looking back over the last decade or so, it’s fair to say that, in general, the standard of how organisations understand their customers has improved.

However, a survey that I recently received from a well-known hotel chain reminded me that there is still a long way to go.

The specific organisation shall remain anonymous. The purpose of this is not to name and shame but rather to use the survey as an example of how not to do things.

To put it bluntly, there is simply no reason why a survey like this should still be in use.

On the plus side, this survey is an incredibly thorough example of how to do it badly. It is suboptimal in so many different ways that it provides a fantastic case study of what not to do, all in one convenient place.

So let's take a look at some of the failings and how they illustrate what should be done.

1. The timing of the survey trigger

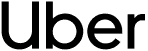

We can start with when the survey is triggered.

I’m about to make a purchase. That is the sole purpose of a digital transaction and the very reason the company has invested in advertising to get me to their website (not to mention why they have dedicated countless hours to improving the digital experience to drive conversion). But then, just as I am about to pay, a survey pop-up appears, getting in the way of me completing payment.

Even when I agree to take the survey, I’m then met with another pop-up, another barrier to me making the payment: something else I need to read, understand and decide what to do with (move out of the way? minimise? cancel?) before I can get back to completing my booking.

This is nothing short of madness. I’m trying to pay and they’re making it harder for me to give them money.

How and where a survey is triggered is incredibly important. It is key to be intentional and approach it from a holistic perspective. You need to look at the customer journey and how you can understand it without damaging the experience or impacting the metrics the business cares about. There are a number of nuances and subtleties to doing it really well, but a survey should not be a barrier to completing a purchase in this way.

Failing: The survey pops up right before payment, interrupting the transaction process and potentially deterring customers from completing their purchase.

Solution: Trigger the survey after the transaction is complete. This respects the primary goal of the visit and captures feedback without hindering the purchase process.

2. The length of the survey

There are two issues here. The first is how the survey is introduced. On the initial pop-up and at the top of the survey form itself, the survey is introduced as a ‘short survey’. Yet the survey is 37 questions long. If that’s a short survey, what’s a long one? This is misleading and sets the wrong expectations.

The second issue is the actual length of the survey. 37 questions is simply too long: it is a dreadful experience for the customer and it doesn’t respect their time.

Failing: The survey is introduced as "short" but contains 37 questions, misleading respondents and contributing to survey fatigue.

Solution: Be transparent about the survey length and reduce the number of questions significantly. Limit surveys to 2-5 questions to maintain engagement and ensure data quality.

3. Survey design and validity

The length of the survey also calls the validity and actionability of the results into question.

Such a poor survey design is likely to mean that only a very small proportion of people will bother to complete it. This narrows the cross-section of customers who provide feedback. Effectively, you will only gather the views of the small segment of people who are prepared to complete a 37-question survey.

In addition to only collecting the views of this small proportion of customers, such poor survey design also increases the chances that the results may not even capture what they think. Respondents are likely to get bored and look for ways to get through it as quickly as possible (such as clicking the first option or always selecting the same score).

The extent to which the results are robust and reliable in terms of decision making is therefore questionable.

Failing: The excessive length and poor design of the survey can lead to unrepresentative results due to respondent fatigue and rushed answers.

Solution: Focus on key questions that provide actionable insights. Use pilot testing to identify and remove redundant or unclear questions.
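One way to gauge how much rushed answering is affecting results is to flag ‘straight-lined’ responses, where a respondent gives the same score to every rating question. The sketch below is purely illustrative: the function names, the example ratings and the `min_answers` threshold are assumptions, not details of the survey discussed here.

```python
from typing import Sequence


def is_straight_lined(scores: Sequence[int], min_answers: int = 5) -> bool:
    """Return True when every rating in a response is identical.

    `min_answers` is an assumed threshold: very short responses are not
    flagged, because identical scores are plausible there by chance.
    """
    if len(scores) < min_answers:
        return False
    return len(set(scores)) == 1


def straight_line_rate(responses: Sequence[Sequence[int]]) -> float:
    """Share of responses flagged as straight-lined (0.0 when empty)."""
    if not responses:
        return 0.0
    flagged = sum(is_straight_lined(r) for r in responses)
    return flagged / len(responses)


# Hypothetical ratings, one inner list per respondent.
responses = [
    [7, 7, 7, 7, 7, 7],    # same score throughout: flagged
    [9, 6, 8, 7, 10, 5],   # varied scores: not flagged
    [3, 3],                # too short to judge: not flagged
    [10, 10, 10, 10, 10],  # same score throughout: flagged
]
print(straight_line_rate(responses))  # 0.5
```

A high rate does not prove the data is unusable, but it is a useful warning sign that the survey is too long or too repetitive.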

4. Relevance and clarity of questions

People’s time is precious and you have a limited amount of attention from customers to gather information. How and what you choose to ask about is crucial. You simply can’t ask about everything, so you have to prioritise which questions to ask.

Take “Please rate the consistency of speed from page to page?” as an example from the survey. This is simply not the optimum use of the limited time and attention that your customers will grant you. Firstly, it is questionable how many people are accurately keeping track of this when they are trying to book a hotel. Secondly, what are you going to do with this information? If a customer scores you a 7, what does that mean? Might it not be their internet connection or other factors that could be influencing speed here?

The same applies to the question “Please rate the balance of graphics and text on this site?”

Failing: Many questions are irrelevant or confusing to respondents (e.g. load speed consistency, balance of graphics and text).

Solution: Evaluate each question's relevance to the overall survey goal. Ensure that questions are clear, concise, and directly tied to actionable business outcomes. Avoid technical questions that respondents are unlikely to be able to answer accurately.

5. Question wording

Following on from the choice of questions (i.e. which questions to ask) the next thing to be aware of is how those questions are asked. Questions should be simple and easy to understand.

Question 11 almost feels deliberately confusing: “Please rate the ability to see the desired views of the hotel rooms you reviewed”.

A number of other questions also lack clarity. There should be no need for multiple read throughs or interpretation on the part of the respondent.

Failing: Some questions are poorly worded and confusing, which can result in unreliable data.

Solution: Use straightforward, unambiguous language. Pre-test questions with a small audience to identify and rectify any confusion.

6. Inappropriate personal questions

There is a delicate balance to be struck when it comes to asking for additional information from customers. There is admittedly subjectivity here, but typically customers have a sense of whether a question feels legitimate. As a general rule of thumb, improving the experience in some way is a legitimate basis for a question, whereas requesting additional personal information that has no relevance to the current experience is more debatable.

Requesting the number and ages of children in my household is a question that feels irrelevant and much more for the organisation’s benefit (e.g. to support future marketing efforts) rather than to improve my experience in some way.

Failing: Questions about personal information, such as the number of children under 11, can be seen as intrusive and irrelevant.

Solution: Ensure all questions are relevant to the survey’s objective and respect customer privacy. Avoid asking for sensitive information unless absolutely necessary and justified.

7. Actionability of data collected

Ensuring that the responses that are collected are as actionable as possible is another key element of effective survey design.

The survey asks “How likely are you to recommend the website to someone else?”

It also asks “How likely are you to make a booking on the website the next time you are travelling?”

For both of these questions the key thing is to know why. Why would the customer be either likely or unlikely to make a booking next time?

The follow up question ‘Why did you give that score?’ is what augments the score and provides the intelligence that can be actioned.

Failing: Many questions are not actionable, because scores are collected without asking why the customer gave them.

Solution: Ensure each question provides actionable insights. If a response isn't something the company can act on directly, reconsider its inclusion. Focus on questions that will lead to tangible improvements in customer experience.

8. Placement and wording of open-ended questions

As per the previous point, the opportunity to let customers provide their own opinion of the experience is fundamental.

However, in this survey the only chance customers have to provide their own perspective is buried 34 questions into the survey.

This ratio of 36 closed questions to just 1 open question is a sign that an organisation has fundamentally misunderstood how to understand the experience of the customer. The right approach is not to assume that you know what is important to customers and then ask closed questions to measure these things. Instead, the right approach is to start by letting the customer tell you what they think is important.

Furthermore, the one open-ended question that is included is limiting because its wording introduces sentiment bias. By asking only for what could be improved, it steers respondents away from giving their opinion on the holistic experience.

Failing: The only open-ended question is placed too far into the survey and is phrased to bias responses towards improvements rather than overall feedback.

Solution: Introduce open-ended questions earlier in the survey to capture spontaneous feedback. Use neutral wording to encourage honest and comprehensive responses (e.g. “Please tell us why you gave that score?”).

9. Collection of data already available

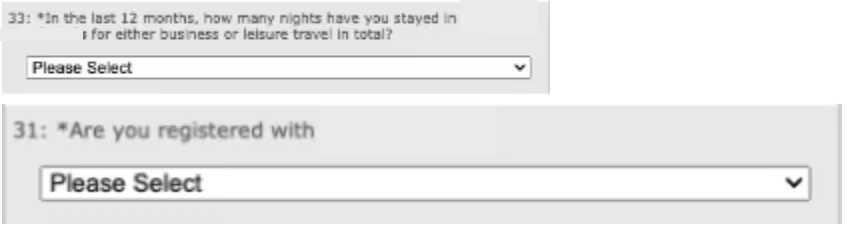

This is another example of failing to respect a respondent’s time. Questions such as whether I am registered with their organisation or how many nights I have stayed in their hotels over the last 12 months are things that shouldn’t need to be asked.

This should be information that the company already has. Responses should be enriched with these additional transactional data points behind the scenes by connecting feedback to the other information that is known about me as a customer (e.g. by automatically cross-referencing my email address or other unique identifier).

Failing: Asking for information that the company already has or can easily obtain (e.g., customer registration status, number of stays) is redundant and annoying for respondents.

Solution: Use existing customer data to pre-fill information or avoid asking these questions altogether. Focus on gathering insights that can't be derived from existing data.
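As a rough illustration of what enriching a response behind the scenes might look like, the sketch below joins a survey response to a customer record using a normalised email address. Everything in it is hypothetical: the `CustomerRecord` fields, the `enrich` function and the example data are assumptions for illustration, not a description of any particular system.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class CustomerRecord:
    """Hypothetical view of what the company already holds on a customer."""
    is_registered: bool
    nights_last_12_months: int


@dataclass
class EnrichedResponse:
    email: str
    score: int
    comment: str
    # These stay None when no matching customer record is found.
    is_registered: Optional[bool]
    nights_last_12_months: Optional[int]


def normalise_email(email: str) -> str:
    """Trim whitespace and lowercase so the same address always matches."""
    return email.strip().lower()


def enrich(
    email: str,
    score: int,
    comment: str,
    customers: Mapping[str, CustomerRecord],
) -> EnrichedResponse:
    """Attach known customer data to a response instead of asking for it."""
    key = normalise_email(email)
    record = customers.get(key)
    return EnrichedResponse(
        email=key,
        score=score,
        comment=comment,
        is_registered=record.is_registered if record else None,
        nights_last_12_months=record.nights_last_12_months if record else None,
    )


# Hypothetical customer data keyed by normalised email address.
customers = {"jo@example.com": CustomerRecord(is_registered=True, nights_last_12_months=4)}

response = enrich("  Jo@Example.com ", 8, "Easy to book", customers)
print(response.is_registered, response.nights_last_12_months)  # True 4
```

With a join like this in place, the survey only needs to ask for the score and the reason behind it; the registration status and stay history come from data the company already holds.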

10. Overall user experience

The combination of the failings above makes for a very poor feedback experience.

It is so often forgotten that the customer’s experience of an organisation is their holistic experience. It is the entirety of their interactions with that business and that includes the survey experience.

Providing a poor survey experience (while attempting to improve the experience) is the very definition of shooting yourself in the foot.

Failing: The survey provides a poor experience, conflicting with the aim of improving customer satisfaction.

Solution: Design the survey experience to be as user-friendly as possible. Ensure the survey is mobile-friendly, quick to complete, and non-intrusive.

How does this happen and how can you avoid it?

This whole survey reads as though it was designed by a group of people from different departments who sat down in a room and said “we would like to know this and this and this and this…”

“We want to be able to measure how quickly our pages are loading in case there is an issue, we want to be able to measure how well the images are loading too, we want to know if a customer is a part of a family and how many kids they have so we can market family offers to them…etc”

It is understandable, but to do this is to approach the problem from the wrong perspective and it inevitably leads to a dreadful outcome.

Fortunately, all that is required to address the issue is to shift to a customer-centric approach. Start with the customer and ask:

“How can we best understand the customer’s experience from their point of view?”

“How can we ensure that the experience of providing feedback is as good as possible?”

“How can we ensure that we respect their time and therefore gather as broad a cross section of views as possible?”

Switching to this mindset of understanding the customer’s perspective and what is important to them is the major step to take to guide your survey design and to deliver infinitely better surveys.

It’s rare to end this blog with a call to action (in fact I don’t think I’ve ever done it before) but if you’re still sending out surveys that look anything like this (or you’re unsure & considering something similar!) please do get in touch for a quick chat.

Getting this right from the beginning will save both you and your customers a whole lot of wasted time, effort and frustration.
