Have you ever received an email from a company with something like “Tell us what you think” in the subject line? I receive one almost every day. Everyone from my phone provider to my calendar app seems to be interested in my opinion. Sounds great, I love giving my opinion. And yet I almost never click on the survey link, and when I do, I usually close the window straight away.

The reason I hate surveys is that they take a lot of time and are incredibly boring. I might have an opinion on your app, and I might even be happy to share it, but I definitely don’t want to answer a series of questions you chose for me, most of which I don’t care about. Quite often the invitation email asks for 10 minutes of my time.

No, I don’t have 10 minutes for you. And even if I do spend that much time on one company, I definitely will not fill in another survey for months.

So how did we get here? Market research is huge because companies do need to know what customers think, and surveys are the only scalable way to find out. Yet surveys are a relic of a past era, when working with data was very hard. They are usually designed to make the research analyst’s life bearable; if some respondent engagement is sacrificed as a result, so be it. Companies like SurveyMonkey built successful businesses out of what is, in a nutshell, digitizing the traditional clipboard surveys you still sometimes see on the street. We can do much better than that.

At Chattermill we want to reimagine the traditional survey using 21st-century technology. With good tools we can both give the respondent a better experience and make better use of the analyst’s time, much in the same way that Uber gives us both cheaper rides and a better customer experience. Here is how we do it:

Treat The Respondent Like A King

We believe there are better ways to collect feedback than asking customers to spend 10 minutes of their time on a boring multiple-choice survey. Nor is the answer a monetary reward or a slightly nicer interface (such as Typeform).

We solve the problem by asking the user two simple questions: a closed question such as the Net Promoter Score question (“How likely are you to recommend Company X to a friend?”), followed by an open-ended question that lets respondents tell us what matters most to them. The key is to keep it simple. There is no point in asking a very specific question if the customer doesn’t care about it enough to have a strong opinion. Let customers speak in their own words and tell you what’s important to them.
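The closed question is easy to turn into a number. The sketch below uses the widely used Net Promoter Score convention (answers of 9 to 10 count as promoters, 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors); the post itself does not spell out the formula, and the example responses are made up for illustration.

```python
from typing import Iterable


def net_promoter_score(scores: Iterable[int]) -> float:
    """Return NPS in [-100, 100] from 0-10 answers to the 'recommend' question."""
    scores = list(scores)
    if not scores:
        raise ValueError("need at least one response")
    for s in scores:
        if not 0 <= s <= 10:
            raise ValueError(f"score out of range: {s}")
    promoters = sum(1 for s in scores if s >= 9)   # 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # 0 to 6; 7-8 are passives
    return 100.0 * (promoters - detractors) / len(scores)


# Hypothetical responses: each pairs the closed score with the open-ended answer.
responses = [
    (10, "Delivery was really fast"),
    (9, "Great choice of products"),
    (7, "Fine, but prices are a bit high"),
    (3, "Customer service never replied"),
]
print(net_promoter_score(score for score, _ in responses))  # prints 25.0
```

The number alone says little about *why* customers feel the way they do; that is what the paired open-ended text is for.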

Let The Machine Do The Work

We have talked to quite a few people in the field, and one theme comes up in every single conversation: open-ended feedback is much more valuable than your typical multiple-choice question. Yet very few research studies make full use of open-ended feedback, because quantifying it is such a pain. Early-stage startups can usually get by tagging responses by hand, but the more you grow, the harder it becomes to keep everything consistent and organized.

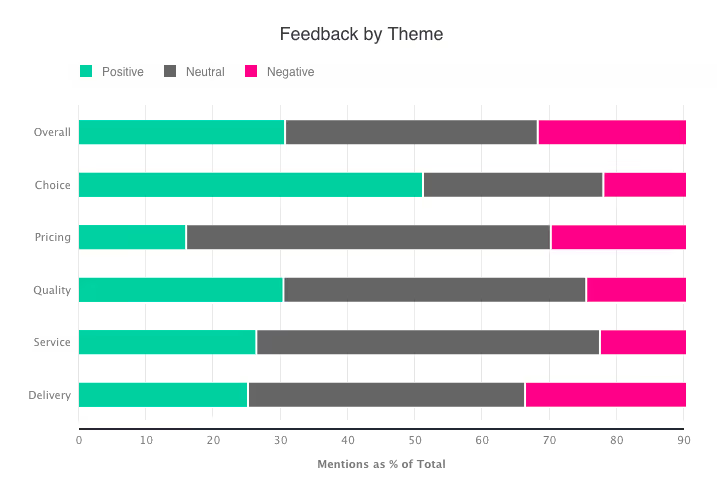

We apply the latest in natural language processing to break responses down into specific themes. Say you run an online shop. You might want to see what people are saying about your delivery, product choice, quality, prices, customer service and so on. Each of these themes can be scored based on customer sentiment, and the same can be done for the specific products or services you offer. As your data grows and changes, you can build a much more detailed categorization. This gives you an instant view of what matters to customers and how they feel about each theme.
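To make the idea of per-theme sentiment scores concrete, here is a deliberately simple sketch. It is not Chattermill’s NLP pipeline: a hand-written keyword list stands in for theme detection and a tiny word lexicon stands in for a sentiment model. The names `THEME_KEYWORDS`, `POSITIVE`, `NEGATIVE` and `theme_scores` are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical keyword lists standing in for a trained theme classifier.
THEME_KEYWORDS = {
    "delivery": {"delivery", "shipping", "arrived", "courier"},
    "prices": {"price", "prices", "expensive", "cheap"},
    "customer service": {"service", "support", "replied", "staff"},
    "product choice": {"choice", "range", "selection"},
}
# Hypothetical sentiment lexicon standing in for a sentiment model.
POSITIVE = {"fast", "great", "love", "helpful", "cheap"}
NEGATIVE = {"slow", "high", "expensive", "never", "late", "rude"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(tokens: list[str]) -> int:
    """Crude polarity: positive hits minus negative hits, clipped to [-1, 1]."""
    raw = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    return max(-1, min(1, raw))


def theme_scores(responses: list[str]) -> dict[str, float]:
    """Average the sentiment of every response that mentions each theme."""
    per_theme = defaultdict(list)
    for text in responses:
        tokens = tokenize(text)
        polarity = sentiment(tokens)
        for theme, keywords in THEME_KEYWORDS.items():
            if keywords.intersection(tokens):
                per_theme[theme].append(polarity)
    return {theme: sum(v) / len(v) for theme, v in per_theme.items()}


# Made-up open-ended answers for an online shop.
answers = [
    "Delivery was really fast",
    "Great choice of products",
    "Fine, but prices are a bit high",
    "Customer service never replied",
    "Delivery was slow and the courier was rude",
]
print(theme_scores(answers))
```

A real system would replace both lexicons with learned models, but the output shape stays the same: one score per theme that can be compared across products or over time.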

You do need a reasonably large sample to make this work, but the same is true of traditional surveys, where statistical significance is quite often underappreciated.
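How large is “reasonably large”? One standard back-of-the-envelope answer, assumed here rather than taken from the post, is the normal-approximation margin of error for a proportion, such as the share of responses that complain about delivery. The function names below are illustrative.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of an approximate 95% confidence interval for a proportion p
    estimated from n responses (normal approximation)."""
    return z * math.sqrt(p * (1 - p) / n)


def required_sample_size(margin: float, p: float = 0.5, z: float = 1.96) -> int:
    """Smallest n whose margin of error is at most `margin`.
    p = 0.5 is the worst case, so it gives a conservative answer."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


# If 30% of 200 responses mention a delivery problem, the true share is
# roughly 30% plus or minus about 6 percentage points.
print(round(margin_of_error(0.3, 200), 3))
# Responses needed to pin any share down to within 5 percentage points.
print(required_sample_size(0.05))
```

The same arithmetic applies to a multiple-choice question, which is why small samples are a problem for traditional surveys too. The approximation gets unreliable for very small samples or for shares close to 0% or 100%.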

Quantify All The Things

Researchers often talk about qualitative and quantitative methods. Our approach gives you the best of both. You may think you understand your data well from manually reading through all the answers, but in reality it is very easy to become biased. Even with a few hundred open-ended responses, you will die of boredom if you have to score each one on a scale. For a computer this is not a problem. The scale itself may be somewhat arbitrary, but at least you get consistency out of the box. This means you can run a survey every day, week or month and build a set of metrics that are easy for your stakeholders to understand.

Interested in finding out more? Just get in touch; we’d love to hear from you.
